What is the Science of Sound? The lecture, “Good Vibrations: The Science of Sound,” was presented at the World Science Festival on June 4th, 2010. Four scientists along with a couple of musicians present how sound came to be.

Because people know that I’m researching the healing properties of music, I get some rather interesting and often entertaining videos and articles sent my way. So, as you read this article, keep in mind that I really enjoy what you send me! This video I actually found by myself, in a sense: someone sent me a YouTube link, and the Science of Sound was then “suggested” for me. With that, here’s my synopsis…

At the beginning of the video, the first presenter, Jamshed Bharucha, a neuroscientist at Tufts University, begins with some music theory and harmonics, followed by a description of pitch in the voice. Bharucha and Megan Curtis, one of his graduate students, ran a research study in which actresses spoke phrases expressing sadness, anger, and happiness. (39 minutes into the video.) Megan believed there would be similarities, and she was right. Actresses expressing sadness consistently spoke in descending minor thirds. Anger came out as an ascending minor second or an ascending augmented 4th (tritone). There were no pitch codes or specific intervals for happy emotions. The explanation offered is that negative emotions are coded specifically because they have consequences, while happy emotions have none. Note: the pitches of the spoken words were determined with a computer program that could pinpoint them very precisely.
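For readers who like to see the numbers behind those intervals, here is a minimal sketch of how a minor second, a minor third, and a tritone translate into frequencies. It assumes standard 12-tone equal temperament, and the 220 Hz starting pitch is only a hypothetical example; none of this comes from the study itself.

```python
# Semitone sizes of the intervals named above (standard music theory).
INTERVAL_SEMITONES = {
    "minor second": 1,
    "minor third": 3,
    "tritone": 6,  # augmented 4th
}


def interval_ratio(semitones: int) -> float:
    """Frequency ratio of an interval in 12-tone equal temperament."""
    return 2 ** (semitones / 12)


def shift_pitch(start_hz: float, semitones: int) -> float:
    """Pitch reached by moving up (positive) or down (negative) by semitones."""
    return start_hz * interval_ratio(semitones)


if __name__ == "__main__":
    start_hz = 220.0  # hypothetical starting pitch, not a value from the study
    sad = shift_pitch(start_hz, -INTERVAL_SEMITONES["minor third"])
    angry_small = shift_pitch(start_hz, INTERVAL_SEMITONES["minor second"])
    angry_large = shift_pitch(start_hz, INTERVAL_SEMITONES["tritone"])
    print(f"descending minor third: {start_hz:.1f} Hz -> {sad:.1f} Hz")
    print(f"ascending minor second: {start_hz:.1f} Hz -> {angry_small:.1f} Hz")
    print(f"ascending tritone:      {start_hz:.1f} Hz -> {angry_large:.1f} Hz")
```

A descending interval simply uses a negative semitone count, which is why the “sad” pitch lands below the starting pitch.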

Another point that Bharucha made: it is known that animals communicate in pitch codes. They use sound in a chorusing way to develop social behavior because it brings them together. In a sense, music fosters cohesion. As humans, we often create social bonds based on the music we listen to. Language is creative. When you hear language spoken in your own dialect, you don’t necessarily “hear” the pitch and sounds of your language. When you hear a language you don’t recognize, it’s much easier to hear its pitches and inflections. We are often not aware of the sound patterns in our own language.

Bharucha suggests that music is not really the universal language, because our music differs from culture to culture and we enjoy some music but not all music. (Examples given at 49 minutes into the video.) He states that we “speak” in pitches. To try this out for yourself, talk into a tuner and watch how your voice actually registers pitches. If you have a smartphone, simply download an app. I use the gStrings tuner because it shows the frequency, in hertz, of any given note.
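If you’re curious what a tuner does with the number it measures, here is a rough sketch of turning a frequency in hertz into the nearest note name. It assumes equal temperament with A4 = 440 Hz and the usual MIDI note numbering; it is an illustration only, not how gStrings or any particular app is actually written.

```python
import math

NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


def nearest_note(freq_hz, a4_hz=440.0):
    """Return (note name with octave, offset in cents) for a frequency.

    Assumes 12-tone equal temperament tuned to a4_hz.
    """
    # MIDI-style note number: A4 is 69, and each step is one semitone.
    exact = 69 + 12 * math.log2(freq_hz / a4_hz)
    nearest = round(exact)
    cents_off = (exact - nearest) * 100  # positive means sharp
    octave = nearest // 12 - 1
    return f"{NOTE_NAMES[nearest % 12]}{octave}", cents_off


if __name__ == "__main__":
    # Hypothetical readings, as if taken while speaking into a tuner.
    for reading_hz in (110.0, 196.0, 233.0):
        name, cents = nearest_note(reading_hz)
        print(f"{reading_hz:.1f} Hz is closest to {name} ({cents:+.0f} cents)")
```

The “cents” value shows how far the spoken pitch sits from the nearest note, which is why a voice rarely lands dead center on a tuner.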

The group Polygraph Lounge presents entertaining music throughout the video. In addition, there are scientists who talk about the ear and how it receives sound. If that interests you, Christopher Shera discusses this at about 54 minutes into the video. Then the sound artist Jacob Kirkegaard presents some interesting “music” made from inside the ear itself. Finally, we hear from an astronomer. Out of the entire video, his presentation is what I found the most striking.

Mark Whittle, an astronomer from the University of Virginia in Charlottesville, discusses the Big Bang and the slew of sounds that came with it. (1 hour and 22 minutes into the video.) He said that, through telescopes, we can see the sound waves that were present at the beginning of the universe as a delicate display of patches in the microwave background. The Big Bang was a much more subtle process than the name suggests; more of an expansion. The universe emerged quiet but slightly rough, filling space in an expanding medium. What we now see was spread about uniformly as a hot, glowing, incandescent gas. The driving mechanism in the early universe was gravity; the gas falls in and bounces out again. The universe is filled with an entire register of round, often spherical, organ pipes. Because they are roughly spherical, the gas bounces in and out: it builds up, pushes out, then gravity pulls it back in again. The early universe was behaving like a musical instrument. The fundamental and harmonics are all there. The bigger the organ pipe, the deeper the note. These organ pipes were about 400 light years across, so the pitches are way below our hearing range. These pitches help us probe the properties of the universe, which is what cosmologists are doing.

When one of the sounds is decoded, the chord being played falls between a minor and a major third (1st harmonic). It captures both moods (sad and happy). We tend to associate minor keys with sadness and major keys with happiness. Within the first 100 years after the Big Bang, the tune started out as a minor third and developed into a major third. Cosmologists are able to analyze the nature of the universe by the sounds from the Big Bang forward in time. It’s what they call the “acoustic era,” which can be traced from about 100 years after the Big Bang. The colors are incandescent colors now, but they started out as bright ultraviolet. As time passes, the register is added from the highest to the deepest notes. The most beautiful aspect of the idea is that these organ pipes are denser, and they are ultimately where the first stars and galaxies formed. When you analyze the web-like distribution of patterns, you can find the harmonic tones still present. Where the organ pipes resided, in the troughs, more galaxies formed. Where the gas was not falling in, the gaps between galaxies ultimately appeared (voids, clusters and filaments). So, cosmologists can see early sound waves that spawned the galaxies.

Whittle took the sounds and harmonics from the first 400,000 years (the acoustic era), brought them into hearing range with a 50-octave shift, and put them on a musical staff. There is a changing chord after the “drop in” pitch (a downward slide) that’s microtonal. The sounds from those first 400,000 years were put into a computer so cosmologists can understand how the sound developed from the Big Bang forward in time. The sound spectrum descends over those years because, from 100 years after the Big Bang, the organ pipes are allowed to sound, and the pitch lowers as time passes during the expansion process. This is how it’s possible to map the positions of the galaxies. These tones ended up spawning the first generations of stars, which formed the galaxies we now inhabit.
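To get a feel for how large a 50-octave shift is, here is a small sketch of the arithmetic. Each octave doubles the frequency, so 50 octaves means multiplying by 2 fifty times. The 220 Hz target pitch is a hypothetical example chosen for illustration and is not a value taken from Whittle’s data.

```python
OCTAVES_SHIFTED = 50  # the shift quoted in the talk
SECONDS_PER_YEAR = 365.25 * 24 * 60 * 60


def shift_octaves(freq_hz, octaves):
    """Raise (positive) or lower (negative) a frequency by whole octaves."""
    return freq_hz * 2.0 ** octaves


def period_in_years(freq_hz):
    """Length of one full vibration, expressed in years."""
    return (1.0 / freq_hz) / SECONDS_PER_YEAR


if __name__ == "__main__":
    audible_hz = 220.0  # hypothetical pitch after the shift
    original_hz = shift_octaves(audible_hz, -OCTAVES_SHIFTED)
    print(f"multiplier for 50 octaves: {2 ** OCTAVES_SHIFTED:,}")
    print(f"pitch before the shift: {original_hz:.3e} Hz")
    print(f"one vibration lasts about {period_in_years(original_hz):,.0f} years")
```

Running the shift backwards like this shows why the original sounds are far below anything we could hear: a single vibration takes an enormous amount of time.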

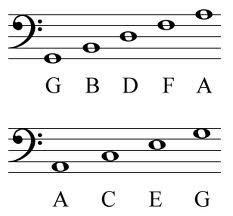

On the musical staff (1 hour and 39 minutes into the video), the pitches are forced into the closest match of the equal tempered scale. Those notes are: A-C-E-G-B-D-F. Notice how they nicely fit into the lines and spaces of the bass clef. In other words, all of the main seven notes of the scale are represented in the creation of the stars and galaxies. For those who understand music theory, you’ll notice this arrangement of notes is built from minor and major thirds. A – C = minor 3rd, C – E = Major 3rd, E – G = minor 3rd, G – B = Major 3rd, B – D = minor 3rd and finally, D – F = minor 3rd. Until the last two, major and minor thirds alternate. I would be curious to know what happened during the times of those major and minor thirds. Are we in a time of a minor third now? I would assume that since new galaxies are being formed daily, those “organ pipes” are still playing. That’s not something addressed in the video, but I am curious about what musical interval our universe is in now. It’s a good question to leave us with.
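For anyone who wants to check the thirds for themselves, here is a short sketch that counts the semitones between each pair of neighboring notes in A-C-E-G-B-D-F. It assumes each step moves upward to the next note, as on the staff, and uses the standard semitone positions of the natural notes; the function names are my own, made up for this example.

```python
# Semitone position of each natural note within an octave (C = 0).
NOTE_TO_SEMITONE = {"C": 0, "D": 2, "E": 4, "F": 5, "G": 7, "A": 9, "B": 11}
THIRD_NAMES = {3: "minor 3rd", 4: "Major 3rd"}

SEQUENCE = ["A", "C", "E", "G", "B", "D", "F"]


def ascending_semitones(lower, upper):
    """Semitones from one note up to the next occurrence of the other."""
    return (NOTE_TO_SEMITONE[upper] - NOTE_TO_SEMITONE[lower]) % 12


def label_thirds(notes):
    """Name the interval between each pair of neighboring notes."""
    labels = []
    for lower, upper in zip(notes, notes[1:]):
        size = ascending_semitones(lower, upper)
        labels.append((lower, upper, THIRD_NAMES.get(size, "not a third")))
    return labels


if __name__ == "__main__":
    for lower, upper, name in label_thirds(SEQUENCE):
        print(f"{lower} - {upper} = {name}")
```

A minor third spans three semitones and a major third spans four, so the code only needs to count the distance between neighbors to name each interval.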

In a nutshell, music was in the beginning with us, crafting the structures we’re now used to. Galaxies and stars were created out of sound. Even in the Bible, when God created the earth, the term “hovered” should actually be translated “vibrated” to more closely match the original Hebrew meaning. Literally, God “vibrated” over the earth during the creation process. So, it appears in the Biblical account as well as the big bang, sound was important in the creation of our universe.

For those who want to watch the full video, enjoy…

© Del Hungerford, 2016
